What are we doing here?

I have been introduced to far more installations of Jenkins that suck than ones that don’t. One of the issues with a system of this complexity that is shared between many teams is that it tends to grow organically over time. Without much “oversight” by a person or team with a holistic view of the system and a plan for growth, these build systems can turn into spaghetti infrastructure the same way a legacy codebase can turn into spaghetti code.

Eventually failures end up evenly dividing themselves amongst infrastructure, bad commits, and flaky tests. Once this happens and trust is eroded, the only plausible fix for most consumers is to re-run the build. This lack of trust makes it much less fun to develop quality, reliable software. It’s very important to be able to trust that the systems you are using verify your work, and not the other way around. An error in a build should unambiguously point to the code, not the system itself.

While not all companies can afford to have a designated CI team supporting the build infrastructure, it is possible to design the system initially (or refactor it) in a way that encourages good practice and stability, thus elevating developers’ confidence in the system and the speed with which they can deliver new features. This series of posts will hopefully get you started in the right direction when you have to build or refactor a CI / CD system using Jenkins.

Describing the characteristics of the end state

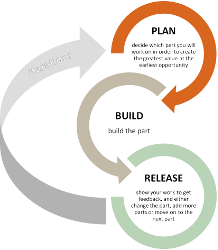

I am a big fan of Agile software development [1] myself. I don’t believe that there is one kind of ‘Agile’ that works for everyone or anything like that, but I do believe 100% in the iterative approach Agile takes to solving complex problems. That means breaking work into small deliverables and using those chunks for planning at multiple intervals, such as:

- Yearly: Very high level direction

- Quarterly: General feature requirements

- Sprint: Feature implementation details

When you have multiple perspectives on the scope and movement of a project, it really gives you the ability to manage expectations and make the most of your time. Since you have a general idea of what the long-term goals are, you (ideally) can then make trade-off decisions with accurate values on the scales. This leads to fewer rewrites and the ability to put a bit more into the code you write, because you know it will become legacy and you know Future You will appreciate the effort.

This is perhaps in opposition to the idea of hacking your way to a solution, which is also a valuable process, but more so for a problem with an undefined domain. Luckily, shipping software has most of its problem domain defined, so we’re able to set long-, medium-, and short-term goals for our CI / CD infrastructure.

Anyways, let’s state some of the properties we want from our build system:

- Easy to develop: A common problem with complex systems is that the process of setting up a local development environment is just as complex, if not more so. We will be developing just about the entire system locally on our laptop so we should hopefully get this for minimal cost.

- Safe to develop: Another property that goes hand in hand with easy to develop is safe to develop. If our local environment is always reaching out to prod to comment on PRs or perhaps pushing branches and sending Slack notifications, it can be misleading and confusing to figure out where exactly these things are coming from. We will attempt to null out any non-local side effects in our development environment to provide a safe place to work.

- Consistently deployable: Hitting the button and getting an error during a deployment is one of the worst feelings ever. In most cases when we need to deploy, we need to deploy right now! While building this project we will always keep master releasable and work with our automation so that no matter when we need to deploy, it will go.

- Easy to upgrade: The best way to mitigate dangerous upgrades is to do them more frequently. While this may sound counterintuitive, it works for a few reasons:

  - The delta is smaller the more frequently you upgrade (and deploy!)

  - Each time you upgrade and deploy, you get more practice at it

  - If you fix a small error each upgrade, eventually most errors are fixed :)

  As we will see, what enables easy upgrades is a rollback safety net and a good way to verify the changes are safe. Since we’ll never be able to catch all the bugs, having the rollback as a backup tool is clutch.

- Programmatically configurable: This is a giant one. Humans are really terrible at doing or creating the same thing over and over. This is way worse once you have a group of humans trying to do the same thing over and over (like creating jobs or configuring the master). Since we cannot be trusted to click the right buttons in the right sequence, we need to make sure the system does not depend on us doing that. We will cover a variety of tools to configure our system including the Netflix Job DSL, Groovy configuration via the Jenkins Init system, and later on Jenkinsfiles. There should be nothing that is hand jammed!

-

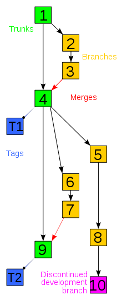

Immutable: “Immutable Infrastructure” 2 is a term that was coined by Chad Fowler back in 2013. He proposes that the benefits of immutability in software architecture (like what is brought by functional programming) would also apply to infrastructure. What this translates to is that instead of mutating a running server by applying updates, rebooting, and changing configuration on running machines, when changes need to be made we should just redeploy a new set of servers with the updated code instead. This makes it much easier to reason about what state a system is in. You will see that this is one of the main characteristics that makes this Jenkins infrastructure different (and I think much better) than a legacy installation.

- 100% in SCM: Since we are committing to programmatic configuration, it is crucial that we keep everything within source control management. We should apply the same rigor to our CI / CD system’s codebase that we do to the one it is testing. PRs and automated tests should be the norm with anything you are creating, even if it is a one-man show (IMHO).

- Secure: Security is too often an afterthought, which is a dangerous way to work in a build system. These systems are so complex and so critical to the company (they actually assemble and package the product) that we MUST make them secure by default. Sane secrets management, least-privilege access, immutable systems, and forcing an audit trail all lead to a more secure and healthy environment.

- Scalable: Build systems at successful companies only grow; they do not shrink. Teams are constantly adding more code, more tests, more jobs, and more artifacts. Normally this occurs in a polyglot environment, leading to rapidly growing requirements on the machines that are actually doing the builds. It does not scale to have a single server with every single requirement installed. This pattern soon becomes unwieldy and hard to work with. We should containerize everything and separate the infrastructure from the system running on top of it to allow independent and customized scaling and maintenance.
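To make the “safe to develop” property a bit more concrete, here is a minimal Python sketch of what nulling out non-local side effects can look like. Everything in it is hypothetical and only for illustration: the `CI_ENV` variable, the `ChatNotifier` and `NullNotifier` classes, and the `make_notifier` helper are assumptions, not part of Jenkins or of the system built in this series.

```python
import os
from typing import List, Mapping, Protocol


class Notifier(Protocol):
    def notify(self, message: str) -> None: ...


class ChatNotifier:
    """Hypothetical stand-in for a notifier that talks to a real chat service."""

    def __init__(self, channel: str) -> None:
        self.channel = channel

    def notify(self, message: str) -> None:
        # A real implementation would call the chat service's API here.
        raise NotImplementedError("wire up the real chat client in production")


class NullNotifier:
    """Records messages locally instead of sending them anywhere."""

    def __init__(self) -> None:
        self.recorded: List[str] = []

    def notify(self, message: str) -> None:
        self.recorded.append(message)


def make_notifier(env: Mapping[str, str] = os.environ) -> Notifier:
    """Return the real notifier only when CI_ENV is explicitly 'production'."""
    if env.get("CI_ENV") == "production":
        return ChatNotifier(channel="#builds")
    # Anything else (local, unset, misspelled) gets the harmless default.
    return NullNotifier()


# In a local environment the message is recorded, not sent.
local = make_notifier({"CI_ENV": "local"})
local.notify("build #1 failed")
```

The design choice worth noting is that the safe behavior is the default: a side effect only leaves the machine when the environment explicitly opts in, so a missing or mistyped setting cannot accidentally reach prod.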

If we can build a system that meets these requirements, we will get a lot of other stuff for free as well, including:

- Easy disaster recovery

- Portability to different service providers

- Fun working environment

- High levels of productivity

- …

- Profit!

An iterative approach

Each iteration will build a shippable increment on top of the iteration before it and eventually we will have a production Jenkins! Along the way we will learn a bunch of stuff, build some Docker containers, write a compose file, automate some configuration, get real groovy, and much, much more. I encourage you to stay tuned and work through solving this problem with me.

All code will be published to this blog’s git repo [3] so that you can verify your answers and fix inconsistencies if you get stuck along the way. The repo should be tagged to match up with this series.

Now that we have a general idea of how we want our system to behave and how we will build it out, let’s dig into the architectural concerns.